From 24f8991e817879a19801fcbc172ed4552202a024 Mon Sep 17 00:00:00 2001

From: LeiGong-USTC <gleisa19@mail.ustc.edu.cn>

Date: Sun, 15 Aug 2021 15:47:20 +0800

Subject: [PATCH] init

---

.gitignore | 21 +

LICENSE.txt | 13 +

docs/faqs.md | 29 +

docs/installation.md | 136 +

docs/instructions_lidarseg.md | 149 ++

docs/instructions_nuimages.md | 160 ++

docs/instructions_nuscenes.md | 373 +++

docs/schema_nuimages.md | 162 ++

docs/schema_nuscenes.md | 211 ++

python-sdk/nuimages/__init__.py | 1 +

python-sdk/nuimages/export/export_release.py | 66 +

python-sdk/nuimages/nuimages.py | 769 ++++++

python-sdk/nuimages/scripts/render_images.py | 227 ++

.../nuimages/scripts/render_rare_classes.py | 86 +

python-sdk/nuimages/tests/__init__.py | 0

python-sdk/nuimages/tests/assert_download.py | 46 +

python-sdk/nuimages/tests/test_attributes.py | 115 +

.../nuimages/tests/test_foreign_keys.py | 147 ++

python-sdk/nuimages/utils/__init__.py | 0

python-sdk/nuimages/utils/test_nuimages.py | 26 +

python-sdk/nuimages/utils/utils.py | 106 +

python-sdk/nuscenes/__init__.py | 1 +

python-sdk/nuscenes/can_bus/README.md | 144 ++

python-sdk/nuscenes/can_bus/can_bus_api.py | 263 ++

python-sdk/nuscenes/eval/__init__.py | 0

python-sdk/nuscenes/eval/common/__init__.py | 0

python-sdk/nuscenes/eval/common/config.py | 37 +

.../nuscenes/eval/common/data_classes.py | 149 ++

python-sdk/nuscenes/eval/common/loaders.py | 284 ++

python-sdk/nuscenes/eval/common/render.py | 68 +

python-sdk/nuscenes/eval/common/utils.py | 169 ++

python-sdk/nuscenes/eval/detection/README.md | 269 ++

.../nuscenes/eval/detection/__init__.py | 0

python-sdk/nuscenes/eval/detection/algo.py | 189 ++

python-sdk/nuscenes/eval/detection/config.py | 29 +

.../configs/detection_cvpr_2019.json | 21 +

.../nuscenes/eval/detection/constants.py | 50 +

.../nuscenes/eval/detection/data_classes.py | 425 +++

.../nuscenes/eval/detection/evaluate.py | 302 +++

python-sdk/nuscenes/eval/detection/render.py | 338 +++

.../nuscenes/eval/detection/tests/__init__.py | 0

.../eval/detection/tests/test_algo.py | 428 +++

.../eval/detection/tests/test_data_classes.py | 117 +

.../eval/detection/tests/test_evaluate.py | 134 +

.../eval/detection/tests/test_loader.py | 194 ++

.../eval/detection/tests/test_utils.py | 225 ++

python-sdk/nuscenes/eval/detection/utils.py | 56 +

python-sdk/nuscenes/eval/lidarseg/README.md | 217 ++

python-sdk/nuscenes/eval/lidarseg/__init__.py | 0

python-sdk/nuscenes/eval/lidarseg/evaluate.py | 158 ++

.../nuscenes/eval/lidarseg/tests/__init__.py | 0

python-sdk/nuscenes/eval/lidarseg/utils.py | 331 +++

.../eval/lidarseg/validate_submission.py | 137 +

python-sdk/nuscenes/eval/prediction/README.md | 91 +

.../nuscenes/eval/prediction/__init__.py | 0

.../prediction/baseline_model_inference.py | 55 +

.../eval/prediction/compute_metrics.py | 67 +

python-sdk/nuscenes/eval/prediction/config.py | 58 +

.../prediction/configs/predict_2020_icra.json | 53 +

.../nuscenes/eval/prediction/data_classes.py | 75 +

.../prediction/docker_container/README.md | 74 +

.../docker_container/docker/Dockerfile | 40 +

.../docker/docker-compose.yml | 17 +

.../nuscenes/eval/prediction/metrics.py | 468 ++++

python-sdk/nuscenes/eval/prediction/splits.py | 41 +

.../eval/prediction/submission/__init__.py | 0

.../prediction/submission/do_inference.py | 81 +

.../prediction/submission/extra_packages.txt | 0

.../eval/prediction/tests/__init__.py | 0

.../eval/prediction/tests/test_dataclasses.py | 18 +

.../eval/prediction/tests/test_metrics.py | 331 +++

python-sdk/nuscenes/eval/tracking/README.md | 351 +++

python-sdk/nuscenes/eval/tracking/__init__.py | 0

python-sdk/nuscenes/eval/tracking/algo.py | 333 +++

.../tracking/configs/tracking_nips_2019.json | 35 +

.../nuscenes/eval/tracking/constants.py | 56 +

.../nuscenes/eval/tracking/data_classes.py | 350 +++

python-sdk/nuscenes/eval/tracking/evaluate.py | 272 ++

python-sdk/nuscenes/eval/tracking/loaders.py | 170 ++

python-sdk/nuscenes/eval/tracking/metrics.py | 202 ++

python-sdk/nuscenes/eval/tracking/mot.py | 131 +

python-sdk/nuscenes/eval/tracking/render.py | 165 ++

.../nuscenes/eval/tracking/tests/__init__.py | 0

.../nuscenes/eval/tracking/tests/scenarios.py | 93 +

.../nuscenes/eval/tracking/tests/test_algo.py | 297 +++

.../eval/tracking/tests/test_evaluate.py | 234 ++

python-sdk/nuscenes/eval/tracking/utils.py | 172 ++

python-sdk/nuscenes/lidarseg/__init__.py | 0

.../nuscenes/lidarseg/class_histogram.py | 199 ++

.../nuscenes/lidarseg/lidarseg_utils.py | 218 ++

python-sdk/nuscenes/map_expansion/__init__.py | 0

.../map_expansion/arcline_path_utils.py | 283 ++

python-sdk/nuscenes/map_expansion/bitmap.py | 75 +

python-sdk/nuscenes/map_expansion/map_api.py | 2296 +++++++++++++++++

.../nuscenes/map_expansion/tests/__init__.py | 0

.../map_expansion/tests/test_all_maps.py | 88 +

.../tests/test_arcline_path_utils.py | 133 +

python-sdk/nuscenes/map_expansion/utils.py | 142 +

python-sdk/nuscenes/nuscenes.py | 2152 +++++++++++++++

python-sdk/nuscenes/prediction/__init__.py | 1 +

python-sdk/nuscenes/prediction/helper.py | 424 +++

.../input_representation/__init__.py | 0

.../prediction/input_representation/agents.py | 276 ++

.../input_representation/combinators.py | 53 +

.../input_representation/interface.py | 54 +

.../input_representation/static_layers.py | 290 +++

.../input_representation/tests/__init__.py | 0

.../input_representation/tests/test_agents.py | 161 ++

.../tests/test_combinators.py | 32 +

.../tests/test_static_layers.py | 88 +

.../input_representation/tests/test_utils.py | 80 +

.../prediction/input_representation/utils.py | 73 +

.../nuscenes/prediction/models/__init__.py | 0

.../nuscenes/prediction/models/backbone.py | 91 +

.../nuscenes/prediction/models/covernet.py | 120 +

python-sdk/nuscenes/prediction/models/mtp.py | 264 ++

.../nuscenes/prediction/models/physics.py | 199 ++

.../nuscenes/prediction/tests/__init__.py | 0

.../nuscenes/prediction/tests/run_covernet.py | 92 +

.../prediction/tests/run_image_generation.py | 117 +

.../nuscenes/prediction/tests/run_mtp.py | 109 +

.../prediction/tests/test_backbone.py | 52 +

.../prediction/tests/test_covernet.py | 81 +

.../nuscenes/prediction/tests/test_mtp.py | 59 +

.../prediction/tests/test_mtp_loss.py | 183 ++

.../prediction/tests/test_physics_models.py | 78 +

.../prediction/tests/test_predict_helper.py | 496 ++++

python-sdk/nuscenes/scripts/README.md | 1 +

python-sdk/nuscenes/scripts/__init__.py | 0

.../scripts/export_2d_annotations_as_json.py | 207 ++

.../scripts/export_egoposes_on_map.py | 57 +

python-sdk/nuscenes/scripts/export_kitti.py | 362 +++

.../scripts/export_pointclouds_as_obj.py | 208 ++

python-sdk/nuscenes/scripts/export_poses.py | 208 ++

.../nuscenes/scripts/export_scene_videos.py | 55 +

python-sdk/nuscenes/tests/__init__.py | 0

python-sdk/nuscenes/tests/assert_download.py | 51 +

python-sdk/nuscenes/tests/test_lidarseg.py | 41 +

python-sdk/nuscenes/tests/test_nuscenes.py | 26 +

.../nuscenes/tests/test_predict_helper.py | 669 +++++

python-sdk/nuscenes/utils/__init__.py | 0

python-sdk/nuscenes/utils/color_map.py | 45 +

python-sdk/nuscenes/utils/data_classes.py | 686 +++++

python-sdk/nuscenes/utils/geometry_utils.py | 145 ++

python-sdk/nuscenes/utils/kitti.py | 554 ++++

python-sdk/nuscenes/utils/map_mask.py | 114 +

python-sdk/nuscenes/utils/splits.py | 218 ++

python-sdk/nuscenes/utils/tests/__init__.py | 0

.../utils/tests/test_geometry_utils.py | 115 +

.../nuscenes/utils/tests/test_map_mask.py | 126 +

python-sdk/tutorials/README.md | 4 +

python-sdk/tutorials/can_bus_tutorial.ipynb | 225 ++

.../tutorials/map_expansion_tutorial.ipynb | 1137 ++++++++

python-sdk/tutorials/nuimages_tutorial.ipynb | 526 ++++

.../nuscenes_lidarseg_tutorial.ipynb | 506 ++++

python-sdk/tutorials/nuscenes_tutorial.ipynb | 1337 ++++++++++

.../tutorials/prediction_tutorial.ipynb | 698 +++++

python-sdk/tutorials/trajectory.gif | Bin 0 -> 365639 bytes

setup/Dockerfile | 30 +

setup/Jenkinsfile | 189 ++

setup/requirements.txt | 4 +

setup/requirements/requirements_base.txt | 13 +

setup/requirements/requirements_nuimages.txt | 1 +

.../requirements/requirements_prediction.txt | 2 +

setup/requirements/requirements_tracking.txt | 2 +

setup/setup.py | 60 +

setup/test_tutorial.sh | 29 +

167 files changed, 29618 insertions(+)

create mode 100644 .gitignore

create mode 100644 LICENSE.txt

create mode 100644 docs/faqs.md

create mode 100644 docs/installation.md

create mode 100644 docs/instructions_lidarseg.md

create mode 100644 docs/instructions_nuimages.md

create mode 100644 docs/instructions_nuscenes.md

create mode 100644 docs/schema_nuimages.md

create mode 100644 docs/schema_nuscenes.md

create mode 100644 python-sdk/nuimages/__init__.py

create mode 100644 python-sdk/nuimages/export/export_release.py

create mode 100644 python-sdk/nuimages/nuimages.py

create mode 100644 python-sdk/nuimages/scripts/render_images.py

create mode 100644 python-sdk/nuimages/scripts/render_rare_classes.py

create mode 100644 python-sdk/nuimages/tests/__init__.py

create mode 100644 python-sdk/nuimages/tests/assert_download.py

create mode 100644 python-sdk/nuimages/tests/test_attributes.py

create mode 100644 python-sdk/nuimages/tests/test_foreign_keys.py

create mode 100644 python-sdk/nuimages/utils/__init__.py

create mode 100644 python-sdk/nuimages/utils/test_nuimages.py

create mode 100644 python-sdk/nuimages/utils/utils.py

create mode 100644 python-sdk/nuscenes/__init__.py

create mode 100644 python-sdk/nuscenes/can_bus/README.md

create mode 100644 python-sdk/nuscenes/can_bus/can_bus_api.py

create mode 100644 python-sdk/nuscenes/eval/__init__.py

create mode 100644 python-sdk/nuscenes/eval/common/__init__.py

create mode 100644 python-sdk/nuscenes/eval/common/config.py

create mode 100644 python-sdk/nuscenes/eval/common/data_classes.py

create mode 100644 python-sdk/nuscenes/eval/common/loaders.py

create mode 100644 python-sdk/nuscenes/eval/common/render.py

create mode 100644 python-sdk/nuscenes/eval/common/utils.py

create mode 100644 python-sdk/nuscenes/eval/detection/README.md

create mode 100644 python-sdk/nuscenes/eval/detection/__init__.py

create mode 100644 python-sdk/nuscenes/eval/detection/algo.py

create mode 100644 python-sdk/nuscenes/eval/detection/config.py

create mode 100644 python-sdk/nuscenes/eval/detection/configs/detection_cvpr_2019.json

create mode 100644 python-sdk/nuscenes/eval/detection/constants.py

create mode 100644 python-sdk/nuscenes/eval/detection/data_classes.py

create mode 100644 python-sdk/nuscenes/eval/detection/evaluate.py

create mode 100644 python-sdk/nuscenes/eval/detection/render.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/__init__.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/test_algo.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/test_data_classes.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/test_evaluate.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/test_loader.py

create mode 100644 python-sdk/nuscenes/eval/detection/tests/test_utils.py

create mode 100644 python-sdk/nuscenes/eval/detection/utils.py

create mode 100644 python-sdk/nuscenes/eval/lidarseg/README.md

create mode 100644 python-sdk/nuscenes/eval/lidarseg/__init__.py

create mode 100644 python-sdk/nuscenes/eval/lidarseg/evaluate.py

create mode 100644 python-sdk/nuscenes/eval/lidarseg/tests/__init__.py

create mode 100644 python-sdk/nuscenes/eval/lidarseg/utils.py

create mode 100644 python-sdk/nuscenes/eval/lidarseg/validate_submission.py

create mode 100644 python-sdk/nuscenes/eval/prediction/README.md

create mode 100644 python-sdk/nuscenes/eval/prediction/__init__.py

create mode 100644 python-sdk/nuscenes/eval/prediction/baseline_model_inference.py

create mode 100644 python-sdk/nuscenes/eval/prediction/compute_metrics.py

create mode 100644 python-sdk/nuscenes/eval/prediction/config.py

create mode 100644 python-sdk/nuscenes/eval/prediction/configs/predict_2020_icra.json

create mode 100644 python-sdk/nuscenes/eval/prediction/data_classes.py

create mode 100644 python-sdk/nuscenes/eval/prediction/docker_container/README.md

create mode 100644 python-sdk/nuscenes/eval/prediction/docker_container/docker/Dockerfile

create mode 100644 python-sdk/nuscenes/eval/prediction/docker_container/docker/docker-compose.yml

create mode 100644 python-sdk/nuscenes/eval/prediction/metrics.py

create mode 100644 python-sdk/nuscenes/eval/prediction/splits.py

create mode 100644 python-sdk/nuscenes/eval/prediction/submission/__init__.py

create mode 100644 python-sdk/nuscenes/eval/prediction/submission/do_inference.py

create mode 100644 python-sdk/nuscenes/eval/prediction/submission/extra_packages.txt

create mode 100644 python-sdk/nuscenes/eval/prediction/tests/__init__.py

create mode 100644 python-sdk/nuscenes/eval/prediction/tests/test_dataclasses.py

create mode 100644 python-sdk/nuscenes/eval/prediction/tests/test_metrics.py

create mode 100644 python-sdk/nuscenes/eval/tracking/README.md

create mode 100644 python-sdk/nuscenes/eval/tracking/__init__.py

create mode 100644 python-sdk/nuscenes/eval/tracking/algo.py

create mode 100644 python-sdk/nuscenes/eval/tracking/configs/tracking_nips_2019.json

create mode 100644 python-sdk/nuscenes/eval/tracking/constants.py

create mode 100644 python-sdk/nuscenes/eval/tracking/data_classes.py

create mode 100644 python-sdk/nuscenes/eval/tracking/evaluate.py

create mode 100644 python-sdk/nuscenes/eval/tracking/loaders.py

create mode 100644 python-sdk/nuscenes/eval/tracking/metrics.py

create mode 100644 python-sdk/nuscenes/eval/tracking/mot.py

create mode 100644 python-sdk/nuscenes/eval/tracking/render.py

create mode 100644 python-sdk/nuscenes/eval/tracking/tests/__init__.py

create mode 100644 python-sdk/nuscenes/eval/tracking/tests/scenarios.py

create mode 100644 python-sdk/nuscenes/eval/tracking/tests/test_algo.py

create mode 100644 python-sdk/nuscenes/eval/tracking/tests/test_evaluate.py

create mode 100644 python-sdk/nuscenes/eval/tracking/utils.py

create mode 100644 python-sdk/nuscenes/lidarseg/__init__.py

create mode 100644 python-sdk/nuscenes/lidarseg/class_histogram.py

create mode 100644 python-sdk/nuscenes/lidarseg/lidarseg_utils.py

create mode 100644 python-sdk/nuscenes/map_expansion/__init__.py

create mode 100644 python-sdk/nuscenes/map_expansion/arcline_path_utils.py

create mode 100644 python-sdk/nuscenes/map_expansion/bitmap.py

create mode 100644 python-sdk/nuscenes/map_expansion/map_api.py

create mode 100644 python-sdk/nuscenes/map_expansion/tests/__init__.py

create mode 100644 python-sdk/nuscenes/map_expansion/tests/test_all_maps.py

create mode 100644 python-sdk/nuscenes/map_expansion/tests/test_arcline_path_utils.py

create mode 100644 python-sdk/nuscenes/map_expansion/utils.py

create mode 100644 python-sdk/nuscenes/nuscenes.py

create mode 100644 python-sdk/nuscenes/prediction/__init__.py

create mode 100644 python-sdk/nuscenes/prediction/helper.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/__init__.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/agents.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/combinators.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/interface.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/static_layers.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/tests/__init__.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/tests/test_agents.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/tests/test_combinators.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/tests/test_static_layers.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/tests/test_utils.py

create mode 100644 python-sdk/nuscenes/prediction/input_representation/utils.py

create mode 100644 python-sdk/nuscenes/prediction/models/__init__.py

create mode 100644 python-sdk/nuscenes/prediction/models/backbone.py

create mode 100644 python-sdk/nuscenes/prediction/models/covernet.py

create mode 100644 python-sdk/nuscenes/prediction/models/mtp.py

create mode 100644 python-sdk/nuscenes/prediction/models/physics.py

create mode 100644 python-sdk/nuscenes/prediction/tests/__init__.py

create mode 100644 python-sdk/nuscenes/prediction/tests/run_covernet.py

create mode 100644 python-sdk/nuscenes/prediction/tests/run_image_generation.py

create mode 100644 python-sdk/nuscenes/prediction/tests/run_mtp.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_backbone.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_covernet.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_mtp.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_mtp_loss.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_physics_models.py

create mode 100644 python-sdk/nuscenes/prediction/tests/test_predict_helper.py

create mode 100644 python-sdk/nuscenes/scripts/README.md

create mode 100644 python-sdk/nuscenes/scripts/__init__.py

create mode 100644 python-sdk/nuscenes/scripts/export_2d_annotations_as_json.py

create mode 100644 python-sdk/nuscenes/scripts/export_egoposes_on_map.py

create mode 100644 python-sdk/nuscenes/scripts/export_kitti.py

create mode 100644 python-sdk/nuscenes/scripts/export_pointclouds_as_obj.py

create mode 100644 python-sdk/nuscenes/scripts/export_poses.py

create mode 100644 python-sdk/nuscenes/scripts/export_scene_videos.py

create mode 100644 python-sdk/nuscenes/tests/__init__.py

create mode 100644 python-sdk/nuscenes/tests/assert_download.py

create mode 100644 python-sdk/nuscenes/tests/test_lidarseg.py

create mode 100644 python-sdk/nuscenes/tests/test_nuscenes.py

create mode 100644 python-sdk/nuscenes/tests/test_predict_helper.py

create mode 100644 python-sdk/nuscenes/utils/__init__.py

create mode 100644 python-sdk/nuscenes/utils/color_map.py

create mode 100644 python-sdk/nuscenes/utils/data_classes.py

create mode 100644 python-sdk/nuscenes/utils/geometry_utils.py

create mode 100644 python-sdk/nuscenes/utils/kitti.py

create mode 100644 python-sdk/nuscenes/utils/map_mask.py

create mode 100644 python-sdk/nuscenes/utils/splits.py

create mode 100644 python-sdk/nuscenes/utils/tests/__init__.py

create mode 100644 python-sdk/nuscenes/utils/tests/test_geometry_utils.py

create mode 100644 python-sdk/nuscenes/utils/tests/test_map_mask.py

create mode 100644 python-sdk/tutorials/README.md

create mode 100644 python-sdk/tutorials/can_bus_tutorial.ipynb

create mode 100644 python-sdk/tutorials/map_expansion_tutorial.ipynb

create mode 100644 python-sdk/tutorials/nuimages_tutorial.ipynb

create mode 100644 python-sdk/tutorials/nuscenes_lidarseg_tutorial.ipynb

create mode 100644 python-sdk/tutorials/nuscenes_tutorial.ipynb

create mode 100644 python-sdk/tutorials/prediction_tutorial.ipynb

create mode 100644 python-sdk/tutorials/trajectory.gif

create mode 100644 setup/Dockerfile

create mode 100644 setup/Jenkinsfile

create mode 100644 setup/requirements.txt

create mode 100644 setup/requirements/requirements_base.txt

create mode 100644 setup/requirements/requirements_nuimages.txt

create mode 100644 setup/requirements/requirements_prediction.txt

create mode 100644 setup/requirements/requirements_tracking.txt

create mode 100644 setup/setup.py

create mode 100755 setup/test_tutorial.sh

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..97addba

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,21 @@

+*.brf

+*.gz

+*.log

+*.aux

+*.pdf

+*.pyc

+*.png

+*.jpg

+*ipynb_*

+*._*

+*.so

+*.o

+*.pth.tar

+bbox.c

+doc

+.DS_STORE

+.DS_Store

+.idea

+.project

+.pydevproject

+_ext

diff --git a/LICENSE.txt b/LICENSE.txt

new file mode 100644

index 0000000..9d987e9

--- /dev/null

+++ b/LICENSE.txt

@@ -0,0 +1,13 @@

+Copyright 2019 Aptiv

+

+Licensed under the Apache License, Version 2.0 (the "License");

+you may not use this file except in compliance with the License.

+You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing, software

+distributed under the License is distributed on an "AS IS" BASIS,

+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+See the License for the specific language governing permissions and

+limitations under the License.

diff --git a/docs/faqs.md b/docs/faqs.md

new file mode 100644

index 0000000..539aeed

--- /dev/null

+++ b/docs/faqs.md

@@ -0,0 +1,29 @@

+# Frequently asked questions

+On this page we try to answer questions frequently asked by our users.

+

+- How can I get in contact?

+ - For questions about commercialization, collaboration and marketing, please contact [nuScenes@nuTonomy.com](mailto:nuScenes@nuTonomy.com).

+ - For issues and bugs *with the devkit*, file an issue on [Github](https://github.com/nutonomy/nuscenes-devkit/issues).

+ - For any other questions, please post in the [nuScenes user forum](https://forum.nuscenes.org/).

+

+- Can I use nuScenes and nuImages for free?

+ - For non-commercial use [nuScenes and nuImages are free](https://www.nuscenes.org/terms-of-use), e.g. for educational use and some research use.

+ - For commercial use please contact [nuScenes@nuTonomy.com](mailto:nuScenes@nuTonomy.com). To allow startups to use our dataset, we adjust the pricing terms to the use case and company size.

+

+- How can I participate in the nuScenes challenges?

+ - See the overview site for the [object detection challenge](https://www.nuscenes.org/object-detection).

+ - See the overview site for the [tracking challenge](https://www.nuscenes.org/tracking).

+ - See the overview site for the [prediction challenge](https://www.nuscenes.org/prediction).

+

+- How can I get more information on the sensors used?

+ - Read the [Data collection](https://www.nuscenes.org/data-collection) page.

+ - Note that we do not *publicly* reveal the vendor name and model to avoid endorsing a particular vendor. All sensors are publicly available from third-party vendors.

+ - For more information, please contact [nuScenes@nuTonomy.com](mailto:nuScenes@nuTonomy.com).

+

+- Can I use nuScenes for 2d object detection?

+ - Objects in nuScenes are annotated in 3d.

+ - You can use [this script](https://github.com/nutonomy/nuscenes-devkit/blob/master/python-sdk/nuscenes/scripts/export_2d_annotations_as_json.py) to project them to 2d, but note that such 2d boxes are not generally tight.

+

+- How can I share my new dataset / paper for Autonomous Driving?

+ - Please contact [nuScenes@nuTonomy.com](mailto:nuScenes@nuTonomy.com) to discuss possible collaborations and listing your work on the [Publications](https://www.nuscenes.org/publications) page.

+ - To discuss it with the community, please post in the [nuScenes user forum](https://forum.nuscenes.org/).

\ No newline at end of file

diff --git a/docs/installation.md b/docs/installation.md

new file mode 100644

index 0000000..645317c

--- /dev/null

+++ b/docs/installation.md

@@ -0,0 +1,136 @@

+# Advanced Installation

+We provide step-by-step instructions to install our devkit. These instructions apply to both nuScenes and nuImages.

+- [Download](#download)

+- [Install Python](#install-python)

+- [Setup a Conda environment](#setup-a-conda-environment)

+- [Setup a virtualenvwrapper environment](#setup-a-virtualenvwrapper-environment)

+- [Setup PYTHONPATH](#setup-pythonpath)

+- [Install required packages](#install-required-packages)

+- [Setup environment variable](#setup-environment-variable)

+- [Setup Matplotlib backend](#setup-matplotlib-backend)

+- [Verify install](#verify-install)

+

+## Download

+

+Download the devkit to your home directory using:

+```

+cd && git clone https://github.com/nutonomy/nuscenes-devkit.git

+```

+## Install Python

+

+The devkit is tested for Python 3.6 onwards, but we recommend to use Python 3.7.

+For Ubuntu: If the right Python version is not already installed on your system, install it by running:

+```

+sudo apt install python-pip

+sudo add-apt-repository ppa:deadsnakes/ppa

+sudo apt-get update

+sudo apt-get install python3.7

+sudo apt-get install python3.7-dev

+```

+For Mac OS download and install from `https://www.python.org/downloads/mac-osx/`.

+

+## Setup a Conda environment

+Next we setup a Conda environment.

+An alternative to Conda is to use virtualenvwrapper, as described [below](#setup-a-virtualenvwrapper-environment).

+

+#### Install miniconda

+See the [official Miniconda page](https://conda.io/en/latest/miniconda.html).

+

+#### Setup a Conda environment

+We create a new Conda environment named `nuscenes`. We will use this environment for both nuScenes and nuImages.

+```

+conda create --name nuscenes python=3.7

+```

+

+#### Activate the environment

+If you are inside the virtual environment, your shell prompt should look like: `(nuscenes) user@computer:~$`

+If that is not the case, you can enable the virtual environment using:

+```

+conda activate nuscenes

+```

+To deactivate the virtual environment, use:

+```

+source deactivate

+```

+

+-----

+## Setup a virtualenvwrapper environment

+Another option for setting up a new virtual environment is to use virtualenvwrapper.

+**Skip these steps if you have already setup a Conda environment**.

+Follow these instructions to setup your environment.

+

+#### Install virtualenvwrapper

+To install virtualenvwrapper, run:

+```

+pip install virtualenvwrapper

+```

+Add the following two lines to `~/.bashrc` (`~/.bash_profile` on MAC OS) to set the location where the virtual environments should live and the location of the script installed with this package:

+```

+export WORKON_HOME=$HOME/.virtualenvs

+source [VIRTUAL_ENV_LOCATION]

+```

+Replace `[VIRTUAL_ENV_LOCATION]` with either `/usr/local/bin/virtualenvwrapper.sh` or `~/.local/bin/virtualenvwrapper.sh` depending on where it is installed on your system.

+After editing it, reload the shell startup file by running e.g. `source ~/.bashrc`.

+

+Note: If you are facing dependency issues with the PIP package, you can also install the devkit as a Conda package.

+For more details, see [this issue](https://github.com/nutonomy/nuscenes-devkit/issues/155).

+

+#### Create the virtual environment

+We create a new virtual environment named `nuscenes`.

+```

+mkvirtualenv nuscenes --python=python3.7

+```

+

+#### Activate the virtual environment

+If you are inside the virtual environment, your shell prompt should look like: `(nuscenes) user@computer:~$`

+If that is not the case, you can enable the virtual environment using:

+```

+workon nuscenes

+```

+To deactivate the virtual environment, use:

+```

+deactivate

+```

+

+## Setup PYTHONPATH

+Add the `python-sdk` directory to your `PYTHONPATH` environmental variable, by adding the following to your `~/.bashrc` (for virtualenvwrapper, you could alternatively add it in `~/.virtualenvs/nuscenes/bin/postactivate`):

+```

+export PYTHONPATH="${PYTHONPATH}:$HOME/nuscenes-devkit/python-sdk"

+```

+

+## Install required packages

+

+To install the required packages, run the following command in your favourite virtual environment:

+```

+pip install -r setup/requirements.txt

+```

+**Note:** The requirements file is internally divided into base requirements (`base`) and requirements specific to certain products or challenges (`nuimages`, `prediction` and `tracking`). If you only plan to use a subset of the codebase, feel free to comment out the lines that you do not need.

+

+## Setup environment variable

+Finally, if you want to run the unit tests you need to point the devkit to the `nuscenes` folder on your disk.

+Set the NUSCENES environment variable to point to your data folder:

+```

+export NUSCENES="/data/sets/nuscenes"

+```

+or for NUIMAGES:

+```

+export NUIMAGES="/data/sets/nuimages"

+```

+

+## Setup Matplotlib backend

+When using Matplotlib, it is generally recommended to define the backend used for rendering:

+1) Under Ubuntu the default backend `Agg` results in any plot not being rendered by default. This does not apply inside Jupyter notebooks.

+2) Under MacOSX a call to `plt.plot()` may fail with the following error (see [here](https://github.com/matplotlib/matplotlib/issues/13414) for more details):

+ ```

+ libc++abi.dylib: terminating with uncaught exception of type NSException

+ ```

+To set the backend, add the following to your `~/.matplotlib/matplotlibrc` file, which needs to be created if it does not exist yet:

+```

+backend: TKAgg

+```

+

+## Verify install

+To verify your environment run `python -m unittest` in the `python-sdk` folder.

+You can also run `assert_download.py` in the `python-sdk/nuscenes/tests` and `python-sdk/nuimages/tests` folders to verify that all files are in the right place.

+

+That's it you should be good to go!

diff --git a/docs/instructions_lidarseg.md b/docs/instructions_lidarseg.md

new file mode 100644

index 0000000..e4dd949

--- /dev/null

+++ b/docs/instructions_lidarseg.md

@@ -0,0 +1,149 @@

+# nuScenes-lidarseg Annotator Instructions

+

+# Overview

+- [Introduction](#introduction)

+- [General Instructions](#general-instructions)

+- [Detailed Instructions](#detailed-instructions)

+- [Classes](#classes)

+

+# Introduction

+In nuScenes-lidarseg, we annotate every point in the lidar pointcloud with a semantic label.

+All the labels from nuScenes are carried over into nuScenes-lidarseg; in addition, more ["stuff" (background) classes](#classes) have been included.

+Thus, nuScenes-lidarseg contains both foreground classes (pedestrians, vehicles, cyclists, etc.) and background classes (driveable surface, nature, buildings, etc.).

+

+

+# General Instructions

+ - Label each point with a class.

+ - Use the camera images to facilitate, check and validate the labels.

+ - Each point belongs to only one class, i.e., one class per point.

+

+

+# Detailed Instructions

++ **Extremities** such as vehicle doors, car mirrors and human limbs should be assigned the same label as the object.

+Note that in contrast to the nuScenes 3d cuboids, the lidarseg labels include car mirrors and antennas.

++ **Minimum number of points**

+ + An object can have as little as **one** point.

+ In such cases, that point should only be labeled if it is certain that the point belongs to a class

+ (with additional verification by looking at the corresponding camera frame).

+ Otherwise, the point should be labeled as `static.other`.

++ **Other static object vs noise.**

+ + **Other static object:** Points that belong to some physical object, but are not defined in our taxonomy.

+ + **Noise:** Points that do not correspond to physical objects or surfaces in the environment

+ (e.g. noise, reflections, dust, fog, raindrops or smoke).

++ **Terrain vs other flat.**

+ + **Terrain:** Grass, all kinds of horizontal vegetation, soil or sand. These areas are not meant to be driven on.

+ This label includes a possibly delimiting curb.

+ Single grass stalks do not need to be annotated and get the label of the region they are growing on.

+ + Short bushes / grass with **heights of less than 20cm**, should be labeled as terrain.

+ Similarly, tall bushes / grass which are higher than 20cm should be labeled as vegetation.

+ + **Other flat:** Horizontal surfaces which cannot be classified as ground plane / sidewalk / terrain, e.g., water.

++ **Terrain vs sidewalk**

+ + **Terrain:** See above.

+ + **Sidewalk:** A sidewalk is a walkway designed for pedestrians and / or cyclists. Sidewalks are always paved.

+

+

+# Classes

+The following classes are in **addition** to the existing ones in nuScenes:

+

+| Label ID | Label | Short Description |

+| --- | --- | --- |

+| 0 | [`noise`](#1-noise-class-0) | Any lidar return that does not correspond to a physical object, such as dust, vapor, noise, fog, raindrops, smoke and reflections. |

+| 24 | [`flat.driveable_surface`](#2-flatdriveable_surface-class-24) | All paved or unpaved surfaces that a car can drive on with no concern of traffic rules. |

+| 25 | [`flat.sidewalk`](#3-flatsidewalk-class-25) | Sidewalk, pedestrian walkways, bike paths, etc. Part of the ground designated for pedestrians or cyclists. Sidewalks do **not** have to be next to a road. |

+| 26 | [`flat.terrain`](#4-flatterrain-class-26) | Natural horizontal surfaces such as ground level horizontal vegetation (< 20 cm tall), grass, rolling hills, soil, sand and gravel. |

+| 27 | [`flat.other`](#5-flatother-class-27) | All other forms of horizontal ground-level structures that do not belong to any of driveable_surface, curb, sidewalk and terrain. Includes elevated parts of traffic islands, delimiters, rail tracks, stairs with at most 3 steps and larger bodies of water (lakes, rivers). |

+| 28 | [`static.manmade`](#6-staticmanmade-class-28) | Includes man-made structures but not limited to: buildings, walls, guard rails, fences, poles, drainages, hydrants, flags, banners, street signs, electric circuit boxes, traffic lights, parking meters and stairs with more than 3 steps. |

+| 29 | [`static.vegetation`](#7-staticvegetation-class-29) | Any vegetation in the frame that is higher than the ground, including bushes, plants, potted plants, trees, etc. Only tall grass (> 20cm) is part of this, ground level grass is part of `flat.terrain`.|

+| 30 | [`static.other`](#8-staticother-class-30) | Points in the background that are not distinguishable. Or objects that do not match any of the above labels. |

+| 31 | [`vehicle.ego`](#9-vehicleego-class-31) | The vehicle on which the cameras, radar and lidar are mounted, that is sometimes visible at the bottom of the image. |

+

+## Examples of classes

+Below are examples of the classes added in nuScenes-lidarseg.

+For simplicity, we only show lidar points which are relevant to the class being discussed.

+

+

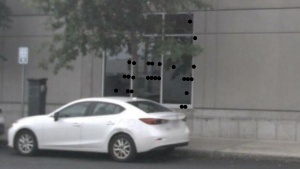

+### 1. noise (class 0)

+

+

+

+

+

+[Top](#classes)

+

+

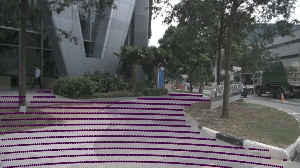

+### 2. flat.driveable_surface (class 24)

+

+

+

+

+

+[Top](#classes)

+

+

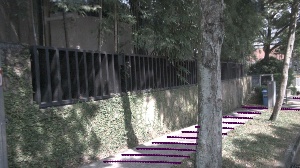

+### 3. flat.sidewalk (class 25)

+

+

+

+

+

+[Top](#classes)

+

+

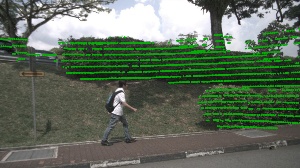

+### 4. flat.terrain (class 26)

+

+

+

+

+

+[Top](#classes)

+

+

+### 5. flat.other (class 27)

+

+

+

+

+

+[Top](#classes)

+

+

+### 6. static.manmade (class 28)

+

+

+

+

+

+[Top](#classes)

+

+

+### 7. static.vegetation (class 29)

+

+

+

+

+

+[Top](#classes)

+

+

+### 8. static.other (class 30)

+

+

+

+

+

+[Top](#classes)

+

+

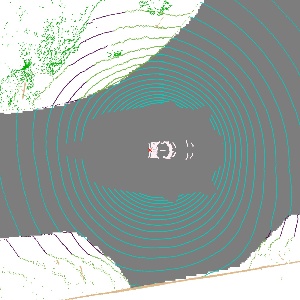

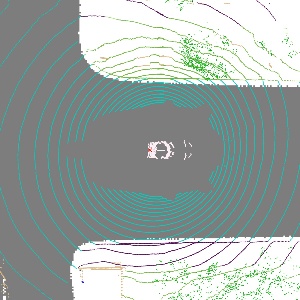

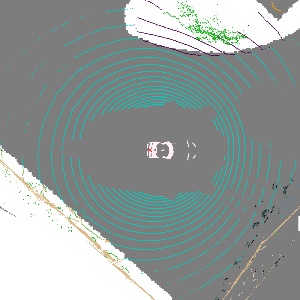

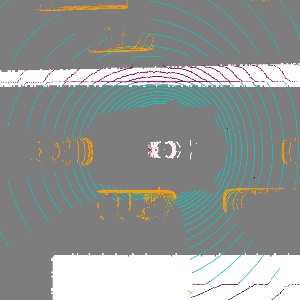

+### 9. vehicle.ego (class 31)

+Points on the ego vehicle generally arise due to self-occlusion, in which some lidar beams hit the ego vehicle.

+When the pointcloud is projected into a chosen camera image, the devkit removes points which are less than

+1m in front of the camera to prevent such points from cluttering the image. Thus, users will not see points

+belonging to `vehicle.ego` projected onto the camera images when using the devkit. To give examples, of the

+`vehicle.ego` class, the bird's eye view (BEV) is used instead:

+

+

+

+

+

+

+[Top](#classes)

diff --git a/docs/instructions_nuimages.md b/docs/instructions_nuimages.md

new file mode 100644

index 0000000..ba45bbf

--- /dev/null

+++ b/docs/instructions_nuimages.md

@@ -0,0 +1,160 @@

+# nuImages Annotator Instructions

+

+# Overview

+- [Introduction](#introduction)

+- [Objects](#objects)

+ - [Bounding Boxes](#bounding-boxes)

+ - [Instance Segmentation](#instance-segmentation)

+ - [Attributes](#attributes)

+- [Surfaces](#surfaces)

+ - [Semantic Segmentation](#semantic-segmentation)

+

+# Introduction

+In nuImages, we annotate objects with 2d boxes, instance masks and 2d segmentation masks. All the labels and attributes from nuScenes are carried over into nuImages.

+We have also [added more attributes](#attributes) in nuImages. For segmentation, we have included ["stuff" (background) classes](#surfaces).

+

+# Objects

+nuImages contains the [same object classes as nuScenes](https://github.com/nutonomy/nuscenes-devkit/tree/master/docs/instructions_nuscenes.md#labels),

+while the [attributes](#attributes) are a superset of the [attributes in nuScenes](https://github.com/nutonomy/nuscenes-devkit/tree/master/docs/instructions_nuscenes.md#attributes).

+

+## Bounding Boxes

+### General Instructions

+ - Draw bounding boxes around all objects that are in the list of [object classes](https://github.com/nutonomy/nuscenes-devkit/tree/master/docs/instructions_nuscenes.md#labels).

+ - Do not apply more than one box to a single object.

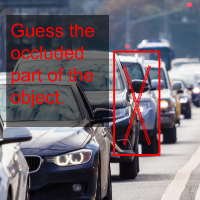

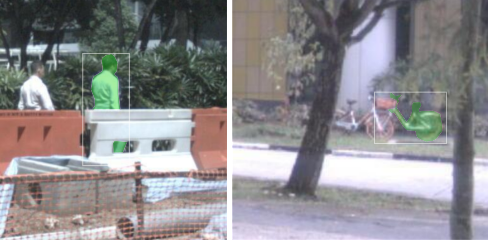

+ - If an object is occluded, then draw the bounding box to include the occluded part of the object according to your best guess.

+

+

+

+ - If an object is cut off at the edge of the image, then the bounding box should stop at the image boundary.

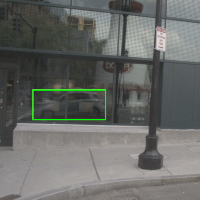

+ - If an object is reflected clearly in a glass window, then the reflection should be annotated.

+

+

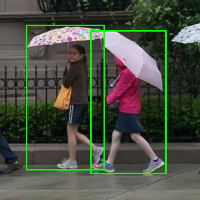

+ - If an object has extremities, the bounding box should include **all** the extremities (exceptions are the side view mirrors and antennas of vehicles).

+ Note that this differs [from how the instance masks are annotated](#instance-segmentation), in which the extremities are included in the masks.

+

+

+

+ - Only label objects if the object is clear enough to be certain of what it is. If an object is so blurry it cannot be known, do not label the object.

+ - Do not label an object if its height is less than 10 pixels.

+ - Do not label an object if its less than 20% visible, unless you can confidently tell what the object is.

+ An object can have low visibility when it is occluded or cut off by the image.

+ The clarity and orientation of the object does not influence its visibility.

+

+### Detailed Instructions

+ - `human.pedestrian.*`

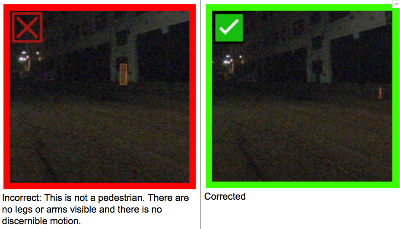

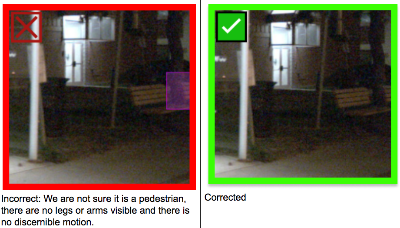

+ - In nighttime images, annotate the pedestrian only when either the body part(s) of a person is clearly visible (leg, arm, head etc.), or the person is clearly in motion.

+

+

+

+ - `vehicle.*`

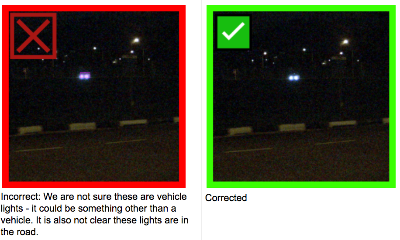

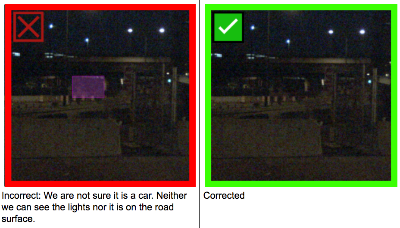

+ - In nighttime images, annotate a vehicle only when a pair of lights is clearly visible (break or head or hazard lights), and it is clearly on the road surface.

+

+

+

+

+

+[Top](#overview)

+

+## Instance Segmentation

+### General Instructions

+ - Given a bounding box, outline the **visible** parts of the object enclosed within the bounding box using a polygon.

+ - Each pixel on the image should be assigned to at most one object instance (i.e. the polygons should not overlap).

+ - There should not be a discrepancy of more than 2 pixels between the edge of the object instance and the polygon.

+ - If an object is occluded by another object whose width is less than 5 pixels (e.g. a thin fence), then the external object can be included in the polygon.

+

+

+ - If an object is loosely covered by another object (e.g. branches, bushes), do not create several polygons for visible areas that are less than 15 pixels in diameter.

+

+

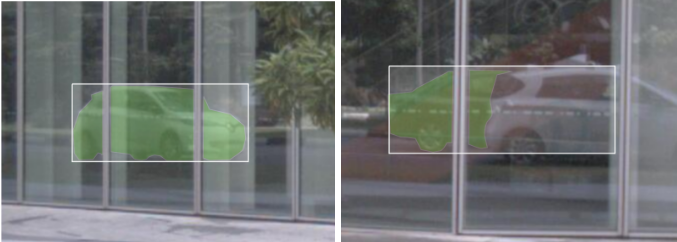

+ - If an object enclosed by the bounding box is occluded by another foreground object but has a visible area through a glass window (like for cars / vans / trucks),

+ do not create a polygon on that visible area.

+

+

+ - If an object has a visible area through a hole of another foreground object, create a polygon on the visible area.

+ Exemptions would be holes from bicycle / motorcycles / bike racks and holes that are less than 15 pixels diameter.

+

+

+ - If a static / moveable object has another object attached to it (signboard, rope), include it in the annotation.

+

+

+ - If parts of an object are not visible due to lighting and / or shadow, it is best to have an educated guess on the non-visible areas of the object.

+

+

+ - If an object is reflected clearly in a glass window, then the reflection should be annotated.

+

+

+

+### Detailed Instructions

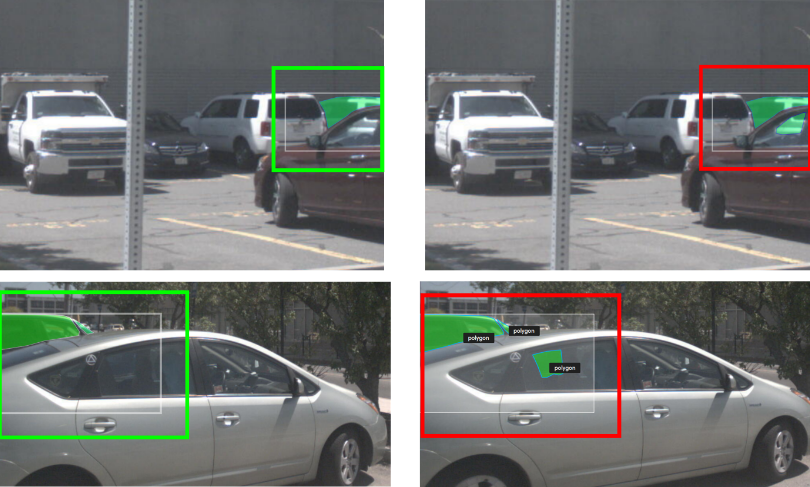

+ - `vehicle.*`

+ - Include extremities (e.g. side view mirrors, taxi heads, police sirens, etc.); exceptions are the crane arms on construction vehicles.

+

+

+

+ - `static_object.bicycle_rack`

+ - All bicycles in a bicycle rack should be annotated collectively as bicycle rack.

+ - **Note:** A previous version of this taxonomy did not include bicycle racks and therefore some images are missing bicycle rack annotations. We leave this class in the dataset, as it is merely an ignore label. The ignore label is used to avoid punishing false positives or false negatives on bicycle racks, where individual bicycles are difficult to identify.

+

+[Top](#overview)

+

+## Attributes

+In nuImages, each object comes with a box, a mask and a set of attributes.

+The following attributes are in **addition** to the [existing ones in nuScenes]((https://github.com/nutonomy/nuscenes-devkit/tree/master/docs/instructions_nuscenes.md#attributes)):

+

+| Attribute | Short Description |

+| --- | --- |

+| vehicle_light.emergency.flashing | The emergency lights on the vehicle are flashing. |

+| vehicle_light.emergency.not_flashing | The emergency lights on the vehicle are not flashing. |

+| vertical_position.off_ground | The object is not in the ground (e.g. it is flying, falling, jumping or positioned in a tree or on a vehicle). |

+| vertical_position.on_ground | The object is on the ground plane. |

+

+[Top](#overview)

+

+

+# Surfaces

+nuImages includes surface classes as well:

+

+| Label | Short Description |

+| --- | --- |

+| [`flat.driveable_surface`](#1-flatdriveable_surface) | All paved or unpaved surfaces that a car can drive on with no concern of traffic rules. |

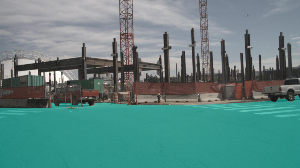

+| [`vehicle.ego`](#2-vehicleego) | The vehicle on which the sensors are mounted, that are sometimes visible at the bottom of the image. |

+

+### 1. flat.driveable_surface

+

+

+

+

+

+### 2. vehicle.ego

+

+

+

+

+

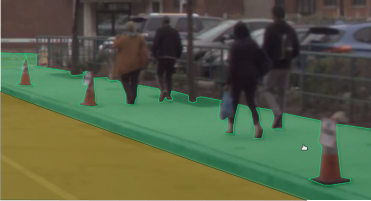

+## Semantic Segmentation

+### General Instructions

+ - Only annotate a surface if its length and width are **both** greater than 20 pixels.

+ - Annotations should tightly bound the edges of the area(s) of interest.

+

+

+ - If two areas/objects of interest are adjacent to each other, there should be no gap between the two annotations.

+

+

+ - Annotate a surface only as far as it is clearly visible.

+

+

+ - If a surface is occluded (e.g. by branches, trees, fence poles), only annotate the visible areas (which are more than 20 pixels in length and width).

+

+

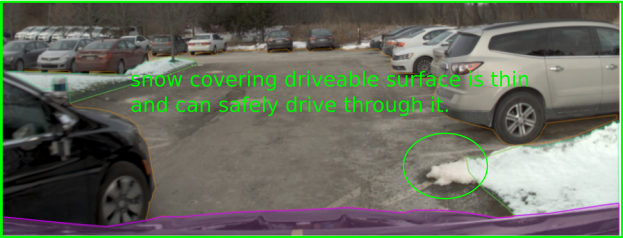

+ - If a surface is covered by dirt or snow of less than 20 cm in height, include the dirt or snow in the annotation (since it can be safely driven over).

+

+

+ - If a surface has puddles in it, always include them in the annotation.

+ - Do not annotate reflections of surfaces.

+

+### Detailed Instructions

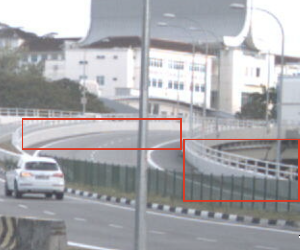

+ - `flat.driveable_surface`

+ - Include surfaces blocked by road blockers or pillars as long as they are the same surface as the driveable surface.

+

+

+

+[Top](#overview)

diff --git a/docs/instructions_nuscenes.md b/docs/instructions_nuscenes.md

new file mode 100644

index 0000000..96b8287

--- /dev/null

+++ b/docs/instructions_nuscenes.md

@@ -0,0 +1,373 @@

+# nuScenes Annotator Instructions

+

+# Overview

+- [Instructions](#instructions)

+- [Special Rules](#special-rules)

+- [Labels](#labels)

+- [Attributes](#attributes)

+- [Detailed Instructions and Examples](#detailed-instructions-and-examples)

+

+# Instructions

++ Draw 3D bounding boxes around all objects from the [labels](#labels) list, and label them according to the instructions below.

++ **Do not** apply more than one box to a single object.

++ Check every cuboid in every frame, to make sure all points are inside the cuboid and **look reasonable in the image view**.

++ For nighttime or rainy scenes, annotate objects as if these are daytime or normal weather scenes.

+

+# Special Rules

++ **Minimum number of points** :

+ + Label any target object containing **at least 1 LIDAR or RADAR point**, as long as you can be reasonably sure you know the location and shape of the object. Use your best judgment on correct cuboid position, sizing, and heading.

++ **Cuboid Sizing** :

+ + **Cuboids must be very tight.** Draw the cuboid as close as possible to the edge of the object without excluding any LIDAR points. There should be almost no visible space between the cuboid border and the closest point on the object.

++ **Extremities** :

+ + **If** an object has extremities (eg. arms and legs of pedestrians), **then** the bounding box should include the extremities.

+ + **Exception**: Do not include vehicle side view mirrors. Also, do not include other vehicle extremities (crane arms etc.) that are above 1.5 meters high.

++ **Carried Object** :

+ + If a pedestrian is carrying an object (bags, umbrellas, tools etc.), such object will be included in the bounding box for the pedestrian. If two or more pedestrians are carrying the same object, the bounding box of only one of them will include the object.

++ **Stationary Objects** :

+ + Sometimes stationary objects move over time due to errors in the localization. If a stationary object’s points shift over time, please create a separate cuboid for every frame.

++ **Use Pictures**:

+ + For objects with few LIDAR or RADAR points, use the images to make sure boxes are correctly sized. If you see that a cuboid is too short in the image view, adjust it to cover the entire object based on the image view.

++ **Visibility Attribute** :

+ + The visibility attribute specifies the percentage of object pixels visible in the panoramic view of all cameras.

+ +

+

+# Labels

+**For every bounding box, include one of the following labels:**

+1. **[Car or Van or SUV](#car-or-van-or-suv)**: Vehicle designed primarily for personal use, e.g. sedans, hatch-backs, wagons, vans, mini-vans, SUVs and jeeps.

+

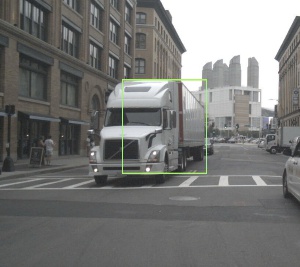

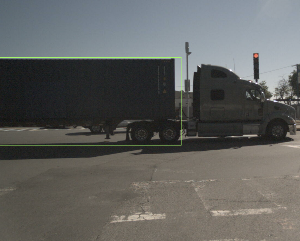

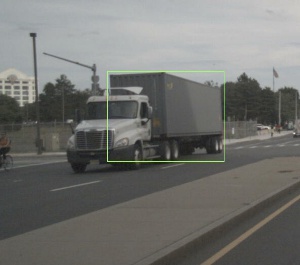

+2. **[Truck](#truck)**: Vehicles primarily designed to haul cargo including pick-ups, lorrys, trucks and semi-tractors. Trailers hauled after a semi-tractor should be labeled as "Trailer".

+

+ - **[Pickup Truck](#pickup-truck)**: A pickup truck is a light duty truck with an enclosed cab and an open or closed cargo area. A pickup truck can be intended primarily for hauling cargo or for personal use.

+

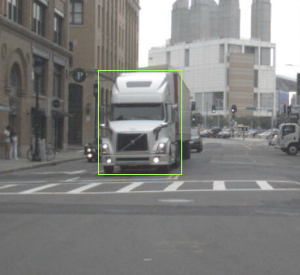

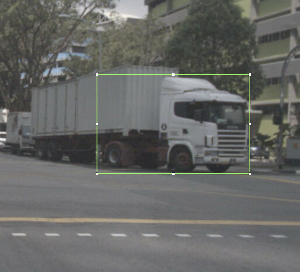

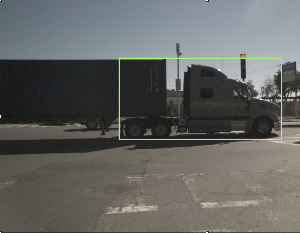

+ - **[Front Of Semi Truck](#front-of-semi-truck)**: Tractor part of a semi trailer truck. Trailers hauled after a semi-tractor should be labeled as a trailer.

+

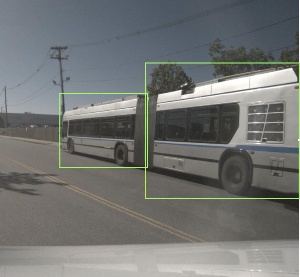

+5. **[Bendy Bus](#bendy-bus)**: Buses and shuttles designed to carry more than 10 people and comprises two or more rigid sections linked by a pivoting joint. Annotate each section of the bendy bus individually.

+

+6. **[Rigid Bus](#rigid-bus)**: Rigid buses and shuttles designed to carry more than 10 people.

+

+7. **[Construction Vehicle](#construction-vehicle)**: Vehicles primarily designed for construction. Typically very slow moving or stationary. Cranes and extremities of construction vehicles are only included in annotations if they interfere with traffic. Trucks used to hauling rocks or building materials are considered trucks rather than construction vehicles.

+

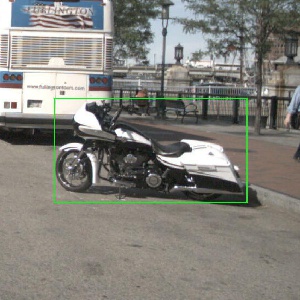

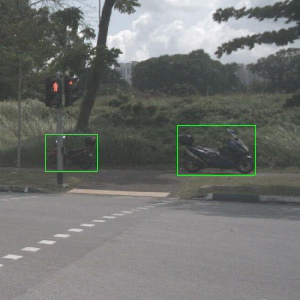

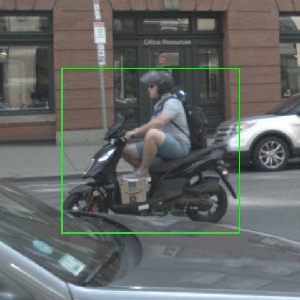

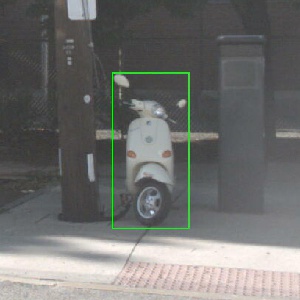

+8. **[Motorcycle](#motorcycle)**: Gasoline or electric powered 2-wheeled vehicle designed to move rapidly (at the speed of standard cars) on the road surface. This category includes all motorcycles, vespas and scooters. It also includes light 3-wheel vehicles, often with a light plastic roof and open on the sides, that tend to be common in Asia. If there is a rider and/or passenger, include them in the box.

+

+9. **[Bicycle](#bicycle)**: Human or electric powered 2-wheeled vehicle designed to travel at lower speeds either on road surface, sidewalks or bicycle paths. If there is a rider and/or passenger, include them in the box.

+

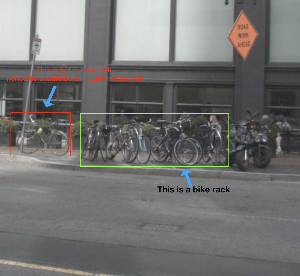

+10. **[Bicycle Rack](#bicycle-rack)**: Area or device intended to park or secure the bicycles in a row. It includes all the bicycles parked in it and any empty slots that are intended for parking bicycles. Bicycles that are not part of the rack should not be included. Instead they should be annotated as bicycles separately.

+

+11. **[Trailer](#trailer)**: Any vehicle trailer, both for trucks, cars and motorcycles (regardless of whether currently being towed or not). For semi-trailers (containers) label the truck itself as "Truck".

+

+12. **[Police Vehicle](#police-vehicle)**: All types of police vehicles including police bicycles and motorcycles.

+

+13. **[Ambulance](#ambulance)**: All types of ambulances.

+

+14. **[Adult Pedestrian](#adult-pedestrian)**: An adult pedestrian moving around the cityscape. Mannequins should also be annotated as Adult Pedestrian.

+

+15. **[Child Pedestrian](#child-pedestrian)**: A child pedestrian moving around the cityscape.

+

+16. **[Construction Worker](#construction-worker)**: A human in the scene whose main purpose is construction work.

+

+17. **[Stroller](#stroller)**: Any stroller. If a person is in the stroller, include in the annotation. If a pedestrian pushing the stroller, then they should be labeled separately.

+

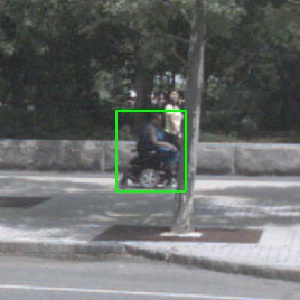

+18. **[Wheelchair](#wheelchair)**: Any type of wheelchair. If a pedestrian is pushing the wheelchair then they should be labeled separately.

+

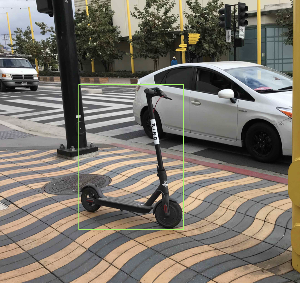

+19. **[Portable Personal Mobility Vehicle](#portable-personal-mobility-vehicle)**: A small electric or self-propelled vehicle, e.g. skateboard, segway, or scooters, on which the person typically travels in a upright position. Driver and (if applicable) rider should be included in the bounding box along with the vehicle.

+

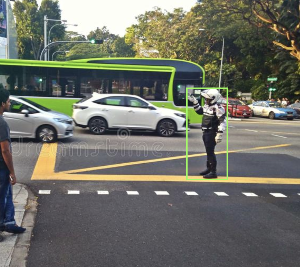

+20. **[Police Officer](#police-officer)**: Any type of police officer, regardless whether directing the traffic or not.

+

+21. **[Animal](#animal)**: All animals, e.g. cats, rats, dogs, deer, birds.

+

+22. **[Traffic Cone](#traffic-cone)**: All types of traffic cones.

+

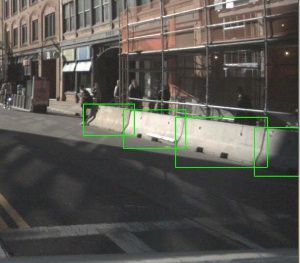

+23. **[Temporary Traffic Barrier](#temporary-traffic-barrier)**: Any metal, concrete or water barrier temporarily placed in the scene in order to re-direct vehicle or pedestrian traffic. In particular, includes barriers used at construction zones. If there are multiple barriers either connected or just placed next to each other, they should be annotated separately.

+

+24. **[Pushable Pullable Object](#pushable-pullable-object)**: Objects that a pedestrian may push or pull. For example dolleys, wheel barrows, garbage-bins with wheels, or shopping carts. Typically not designed to carry humans.

+

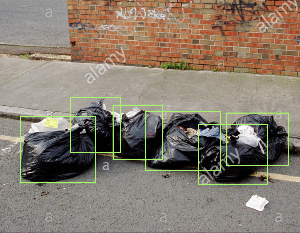

+25. **[Debris](#debris)**: Debris or movable object that is too large to be driven over safely. Includes misc. things like trash bags, temporary road-signs, objects around construction zones, and trash cans.

+

+# Attributes

+1. **For every object, include the attribute:**

+ + **Visibility**:

+ + **0%-40%**: The object is 0% to 40% visible in panoramic view of all cameras.

+ + **41%-60%**: The object is 41% to 60% visible in panoramic view of all cameras.

+ + **61%-80%**: The object is 61% to 80% visible in panoramic view of all cameras.

+ + **81%-100%**: The object is 81% to 100% visible in panoramic view of all cameras.

+ + This attribute specifies the percentage of an object visible through the cameras. For this estimation to be carried out, all the different camera views should be considered as one and the visible portion would be gauged in the resulting **panoramic view**

+ +

+2. **For each vehicle with four or more wheels, select the status:**

+ + **Vehicle Activity**:

+ + **Parked**: Vehicle is stationary (usually for longer duration) with no immediate intent to move.

+ + **Stopped**: Vehicle, with a driver/rider in/on it, is currently stationary but has an intent to move.

+ + **Moving**: Vehicle is moving.

+3. **For each bicycle, motorcycle and portable personal mobility vehicle, select the rider status.**

+ + **Has Rider**:

+ + **Yes**: There is a rider on the bicycle or motorcycle.

+ + **No**: There is NO rider on the bicycle or motorcycle.

+4. **For each human in the scene, select the status**

+ + **Human Activity**:

+ + **Sitting or Lying Down**: The human is sitting or lying down.

+ + **Standing**: The human is standing.

+ + **Moving**: The human is moving.

+

+<br><br><br>

+ # Detailed Instructions and Examples

+

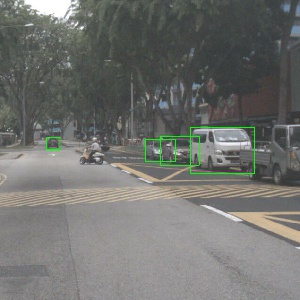

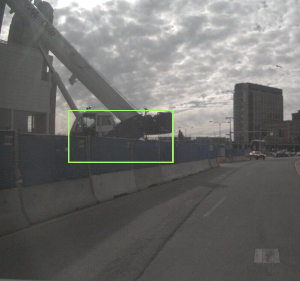

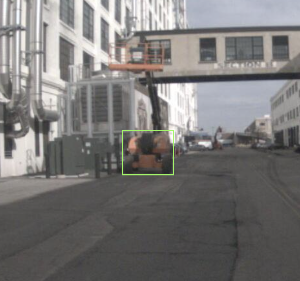

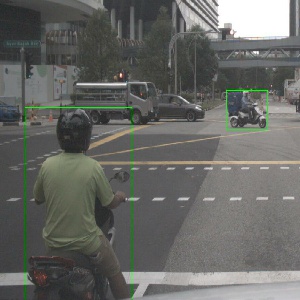

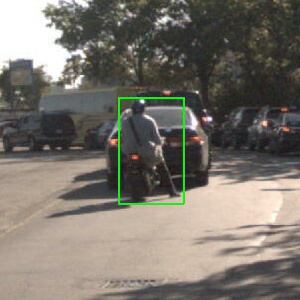

+Bounding Box color convention in example images:

+ + **Green**: Objects like this should be annotated

+ + **Red**: Objects like this should not be annotated

+

+

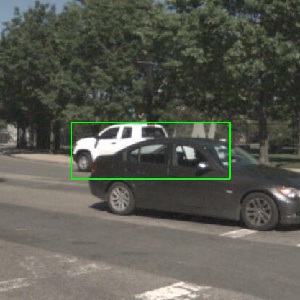

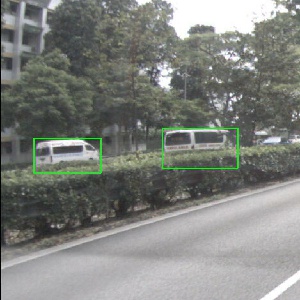

+## Car or Van or SUV

++ Vehicle designed primarily for personal use, e.g. sedans, hatch-backs, wagons, vans, mini-vans, SUVs and jeeps.

+ + If the vehicle is designed to carry more than 10 people label it is a bus.

+ + If it is primarily designed to haul cargo it is a truck.

+

+

+

+

+ [Top](#overview)

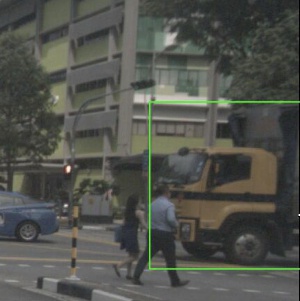

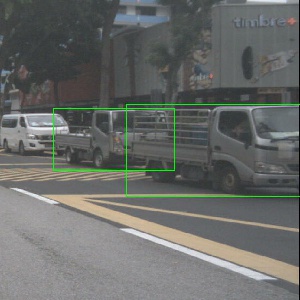

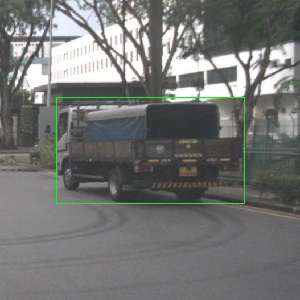

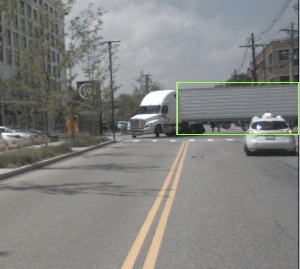

+## Truck

++ Vehicles primarily designed to haul cargo including pick-ups, lorrys, trucks and semi-tractors. Trailers hauled after a semi-tractor should be labeled as vehicle.trailer.

+

+

+

+

+

+

+**Pickup Truck**

++ A pickup truck is a light duty truck with an enclosed cab and an open or closed cargo area. A pickup truck can be intended primarily for hauling cargo or for personal use.

+

+

+

+

+

+

+**Front Of Semi Truck**

++ Tractor part of a semi trailer truck. Trailers hauled after a semi-tractor should be labeled as a trailer.

+

+

+

+

+

+

+

+

+ [Top](#overview)

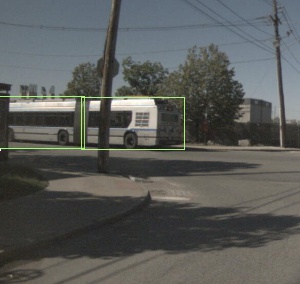

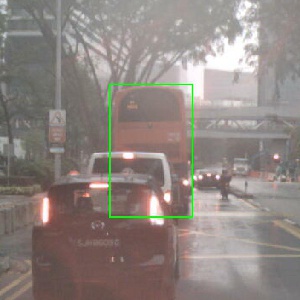

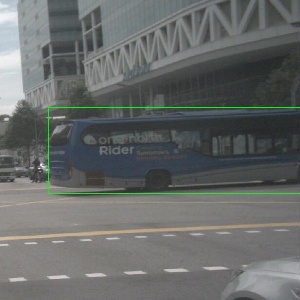

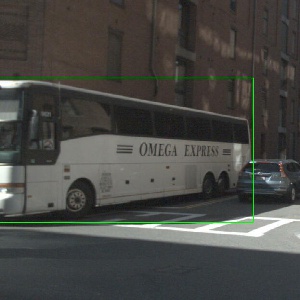

+## Bendy Bus

++ Buses and shuttles designed to carry more than 10 people and comprises two or more rigid sections linked by a pivoting joint.

+ + Annotate each section of the bendy bus individually.

+ + If you cannot see the pivoting joint of the bus, annotate it as **rigid bus**.

+

+

+

+

+

+

+ [Top](#overview)

+## Rigid Bus

++ Rigid buses and shuttles designed to carry more than 10 people.

+

+

+

+

+

+

+ [Top](#overview)

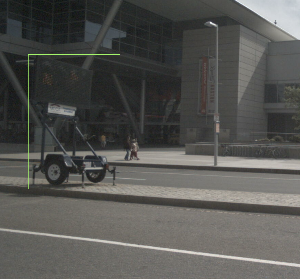

+## Construction Vehicle

++ Vehicles primarily designed for construction. Typically very slow moving or stationary.

+ + Trucks used to hauling rocks or building materials are considered as truck rather than construction vehicles.

+ + Cranes and extremities of construction vehicles are only included in annotations if they interferes with traffic.

+

+

+

+

+

+

+

+ [Top](#overview)

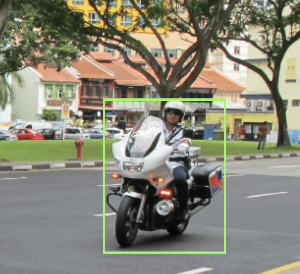

+## Motorcycle

++ Gasoline or electric powered 2-wheeled vehicle designed to move rapidly (at the speed of standard cars) on the road surface. This category includes all motorcycles, vespas and scooters. It also includes light 3-wheel vehicles, often with a light plastic roof and open on the sides, that tend to be common in Asia.

+ + If there is a rider, include the rider in the box.

+ + If there is a passenger, include the passenger in the box.

+ + If there is a pedestrian standing next to the motorcycle, do NOT include in the annotation.

+

+

+

+

+

+

+

+

+

+

+ [Top](#overview)

+## Bicycle

++ Human or electric powered 2-wheeled vehicle designed to travel at lower speeds either on road surface, sidewalks or bicycle paths.

+ + If there is a rider, include the rider in the box

+ + If there is a passenger, include the passenger in the box

+ + If there is a pedestrian standing next to the bicycle, do NOT include in the annotation

+

+

+

+

+

+

+ [Top](#overview)

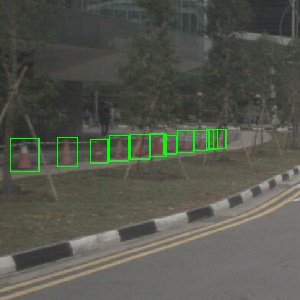

+## Bicycle Rack

++ Area or device intended to park or secure the bicycles in a row. It includes all the bicycles parked in it and any empty slots that are intended for parking bicycles.

+ + Bicycles that are not part of the rack should not be included. Instead they should be annotated as bicycles separately.

+

+

+

+

+

+

+

+

+ [Top](#overview)

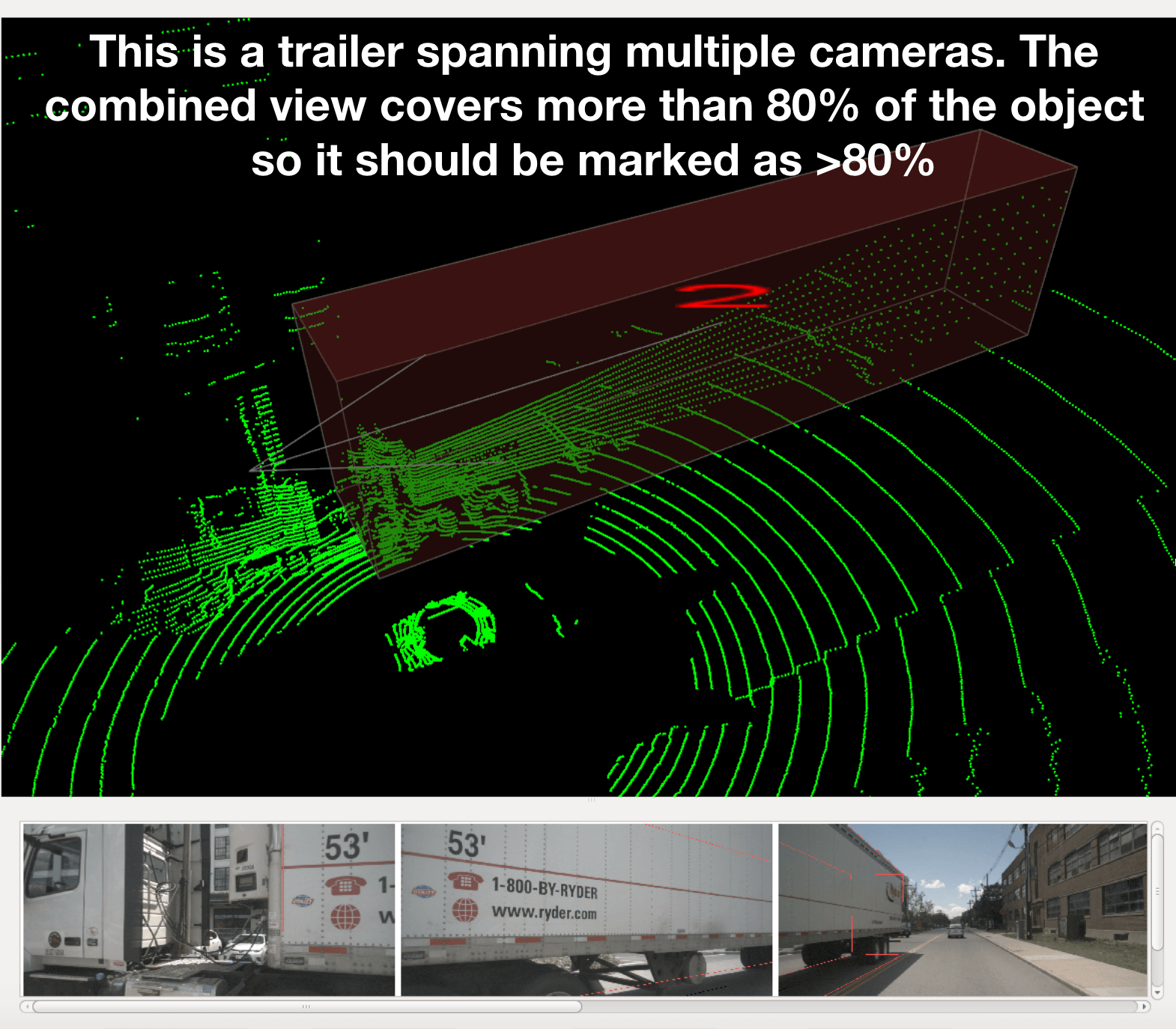

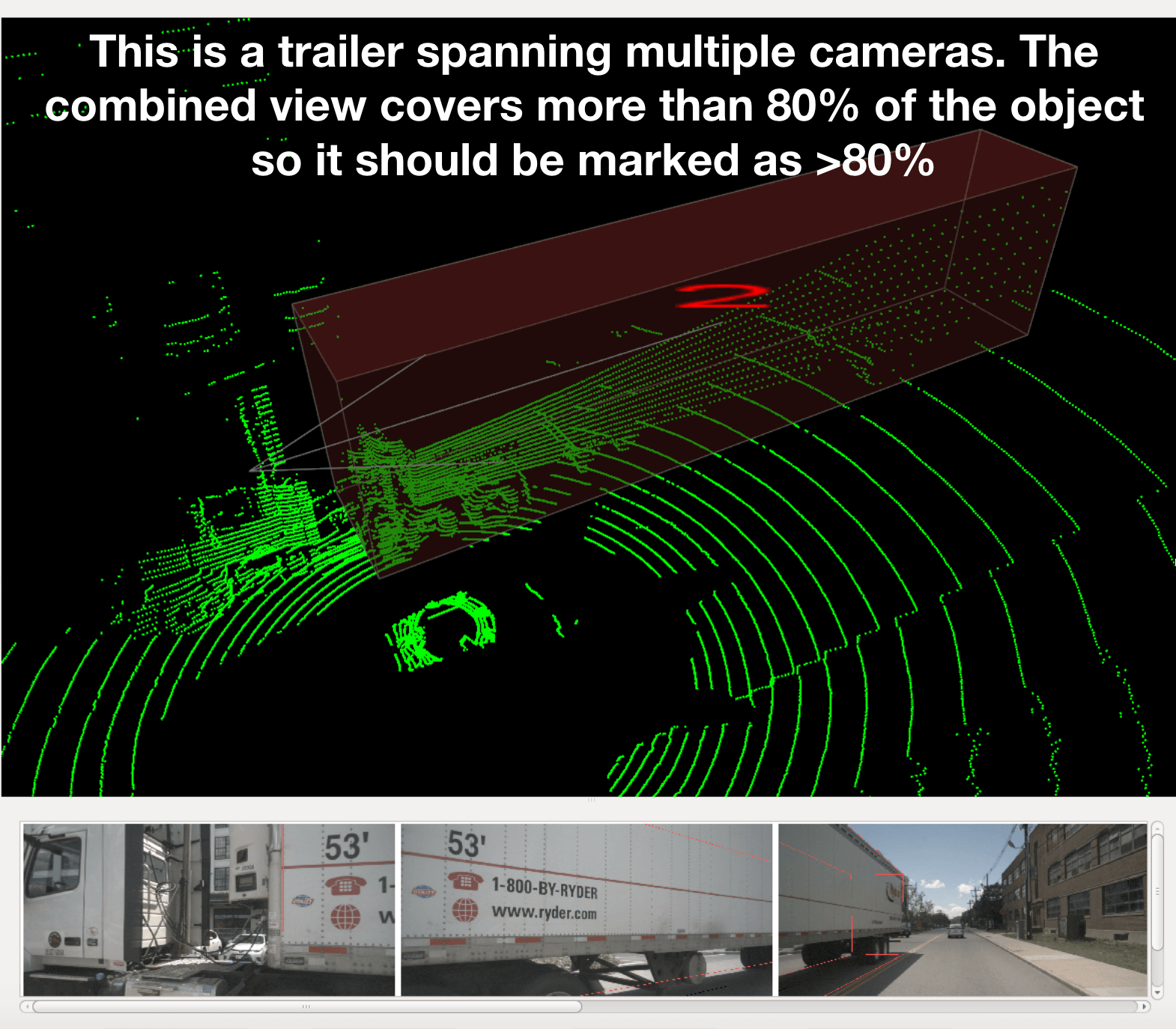

+## Trailer

++ Any vehicle trailer, both for trucks, cars and motorcycles (regardless of whether currently being towed or not). For semi-trailers (containers) label the truck itself as "front of semi truck".

+ + A vehicle towed by another vehicle should be labeled as vehicle (not as trailer).

+

+

+

+

+

+

+

+

+

+

+

+

+ [Top](#overview)

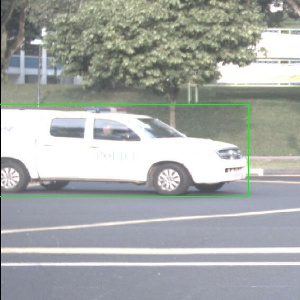

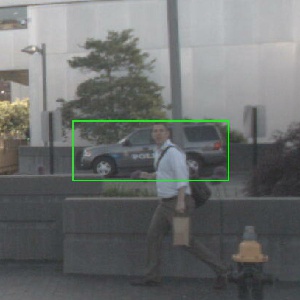

+## Police Vehicle

++ All types of police vehicles including police bicycles and motorcycles.

+

+

+

+

+

+

+

+ [Top](#overview)

+## Ambulance

++ All types of ambulances.

+

+

+

+

+ [Top](#overview)

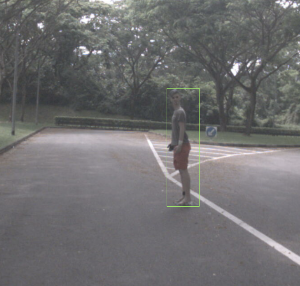

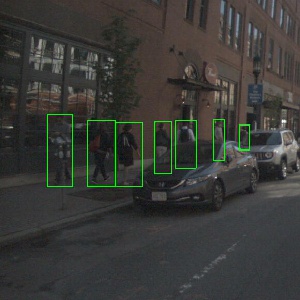

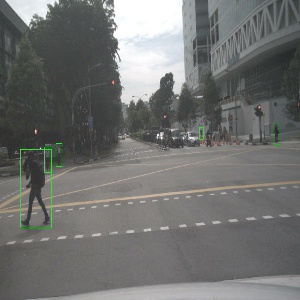

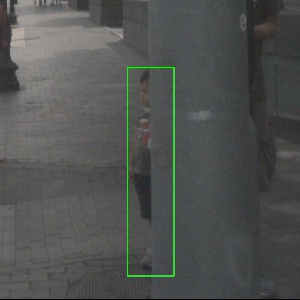

+## Adult Pedestrian

++ An adult pedestrian moving around the cityscape.

+ + Mannequins should also be treated as adult pedestrian.

+

+

+

+

+

+

+

+ [Top](#overview)

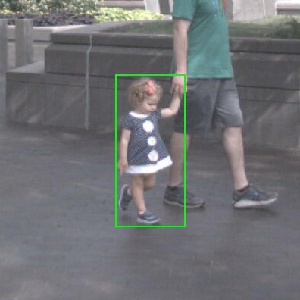

+## Child Pedestrian

++ A child pedestrian moving around the cityscape.

+

+

+

+

+

+

+ [Top](#overview)

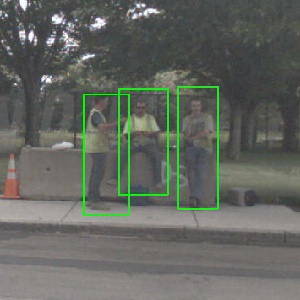

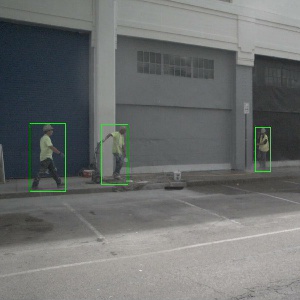

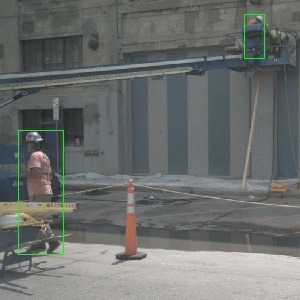

+## Construction Worker

++ A human in the scene whose main purpose is construction work.

+

+

+

+

+

+

+ [Top](#overview)

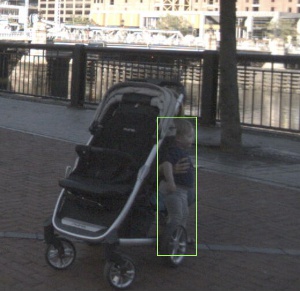

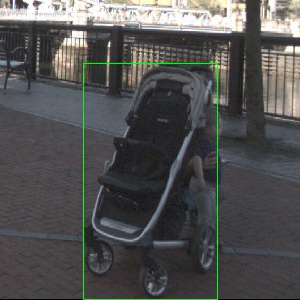

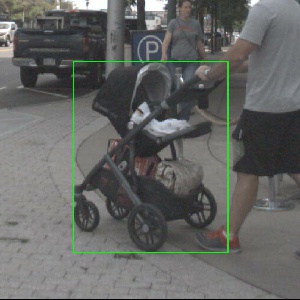

+## Stroller

++ Any stroller

+ + If a person is in the stroller, include in the annotation.

+ + Pedestrians pushing strollers should be labeled separately.

+

+

+

+

+

+

+ [Top](#overview)

+## Wheelchair

++ Any type of wheelchair

+ + If a person is in the wheelchair, include in the annotation.

+ + Pedestrians pushing wheelchairs should be labeled separately.

+

+

+

+

+

+ [Top](#overview)

+## Portable Personal Mobility Vehicle

++ A small electric or self-propelled vehicle, e.g. skateboard, segway, or scooters, on which the person typically travels in a upright position. Driver and (if applicable) rider should be included in the bounding box along with the vehicle.

+

+

+

+

+ [Top](#overview)

+## Police Officer

++ Any type of police officer, regardless whether directing the traffic or not.

+

+

+

+

+

+

+ [Top](#overview)

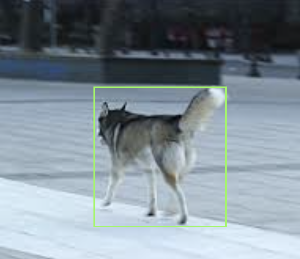

+## Animal

++ All animals, e.g. cats, rats, dogs, deer, birds.

+

+

+

+

+

+

+

+ [Top](#overview)

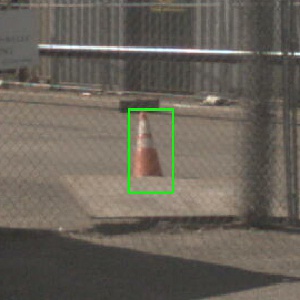

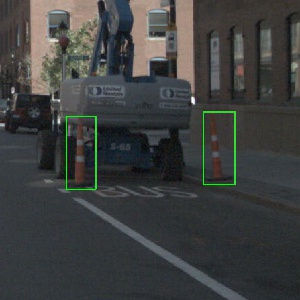

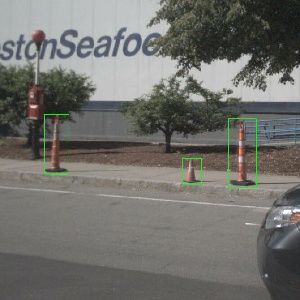

+## Traffic Cone

++ All types of traffic cones.

+

+

+

+

+

+

+

+ [Top](#overview)

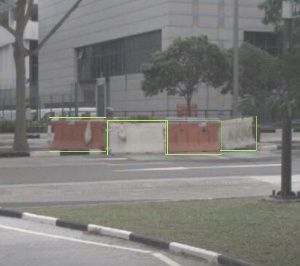

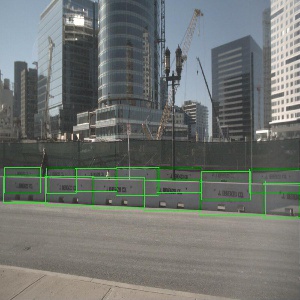

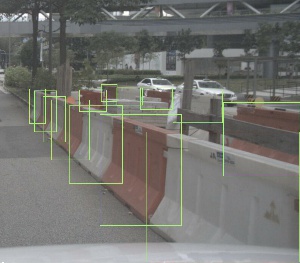

+## Temporary Traffic Barrier

++ Any metal, concrete or water barrier temporarily placed in the scene in order to re-direct vehicle or pedestrian traffic. In particular, includes barriers used at construction zones.

+ + If there are multiple barriers either connected or just placed next to each other, they should be annotated separately.

+ + If barriers are installed permanently, then do NOT include them.

+

+

+

+

+

+

+

+

+ [Top](#overview)

+## Pushable Pullable Object

++ Objects that a pedestrian may push or pull. For example dolleys, wheel barrows, garbage-bins with wheels, or shopping carts. Typically not designed to carry humans.

+

+

+

+

+

+

+ [Top](#overview)

+## Debris

++ Debris or movable object that is left **on the driveable surface** that is too large to be driven over safely, e.g tree branch, full trash bag etc.

+

+

+

+

+ [Top](#overview)

diff --git a/docs/schema_nuimages.md b/docs/schema_nuimages.md

new file mode 100644

index 0000000..172196f

--- /dev/null

+++ b/docs/schema_nuimages.md

@@ -0,0 +1,162 @@

+nuImages schema

+==========

+This document describes the database schema used in nuImages.

+All annotations and meta data (including calibration, maps, vehicle coordinates etc.) are covered in a relational database.

+The database tables are listed below.

+Every row can be identified by its unique primary key `token`.

+Foreign keys such as `sample_token` may be used to link to the `token` of the table `sample`.

+Please refer to the [tutorial](https://www.nuscenes.org/nuimages#tutorials) for an introduction to the most important database tables.

+

+

+

+attribute

+---------

+An attribute is a property of an instance that can change while the category remains the same.

+Example: a vehicle being parked/stopped/moving, and whether or not a bicycle has a rider.

+The attributes in nuImages are a superset of those in nuScenes.

+```

+attribute {

+ "token": <str> -- Unique record identifier.

+ "name": <str> -- Attribute name.

+ "description": <str> -- Attribute description.

+}

+```

+

+calibrated_sensor

+---------

+Definition of a particular camera as calibrated on a particular vehicle.

+All extrinsic parameters are given with respect to the ego vehicle body frame.

+Contrary to nuScenes, all camera images come distorted and unrectified.

+```

+calibrated_sensor {

+ "token": <str> -- Unique record identifier.

+ "sensor_token": <str> -- Foreign key pointing to the sensor type.

+ "translation": <float> [3] -- Coordinate system origin in meters: x, y, z.

+ "rotation": <float> [4] -- Coordinate system orientation as quaternion: w, x, y, z.

+ "camera_intrinsic": <float> [3, 3] -- Intrinsic camera calibration. Empty for sensors that are not cameras.

+ "camera_distortion": <float> [5 or 6] -- Camera calibration parameters [k1, k2, p1, p2, k3, k4]. We use the 5 parameter camera convention of the CalTech camera calibration toolbox, that is also used in OpenCV. Only for fish-eye lenses in CAM_BACK do we use the 6th parameter (k4).

+}

+```

+

+category

+---------

+Taxonomy of object categories (e.g. vehicle, human).

+Subcategories are delineated by a period (e.g. `human.pedestrian.adult`).

+The categories in nuImages are the same as in nuScenes (w/o lidarseg), plus `flat.driveable_surface`.

+```

+category {

+ "token": <str> -- Unique record identifier.

+ "name": <str> -- Category name. Subcategories indicated by period.

+ "description": <str> -- Category description.

+}

+```

+

+ego_pose

+---------

+Ego vehicle pose at a particular timestamp. Given with respect to global coordinate system of the log's map.

+The ego_pose is the output of a lidar map-based localization algorithm described in our paper.

+The localization is 2-dimensional in the x-y plane.

+Warning: nuImages is collected from almost 500 logs with different maps versions.

+Therefore the coordinates **should not be compared across logs** or rendered on the semantic maps of nuScenes.

+```

+ego_pose {

+ "token": <str> -- Unique record identifier.

+ "translation": <float> [3] -- Coordinate system origin in meters: x, y, z. Note that z is always 0.

+ "rotation": <float> [4] -- Coordinate system orientation as quaternion: w, x, y, z.

+ "timestamp": <int> -- Unix time stamp.

+ "rotation_rate": <float> [3] -- The angular velocity vector (x, y, z) of the vehicle in rad/s. This is expressed in the ego vehicle frame.

+ "acceleration": <float> [3] -- Acceleration vector (x, y, z) in the ego vehicle frame in m/s/s. The z value is close to the gravitational acceleration `g = 9.81 m/s/s`.

+ "speed": <float> -- The speed of the ego vehicle in the driving direction in m/s.

+}

+```

+

+log

+---------

+Information about the log from which the data was extracted.

+```

+log {

+ "token": <str> -- Unique record identifier.

+ "logfile": <str> -- Log file name.

+ "vehicle": <str> -- Vehicle name.

+ "date_captured": <str> -- Date (YYYY-MM-DD).

+ "location": <str> -- Area where log was captured, e.g. singapore-onenorth.

+}

+```

+

+object_ann

+---------

+The annotation of a foreground object (car, bike, pedestrian) in an image.

+Each foreground object is annotated with a 2d box, a 2d instance mask and category-specific attributes.

+```

+object_ann {

+ "token": <str> -- Unique record identifier.

+ "sample_data_token": <str> -- Foreign key pointing to the sample data, which must be a keyframe image.

+ "category_token": <str> -- Foreign key pointing to the object category.

+ "attribute_tokens": <str> [n] -- Foreign keys. List of attributes for this annotation.

+ "bbox": <int> [4] -- Annotated amodal bounding box. Given as [xmin, ymin, xmax, ymax].

+ "mask": <RLE> -- Run length encoding of instance mask using the pycocotools package.

+}

+```

+

+sample_data

+---------

+Sample_data contains the images and information about when they were captured.

+Sample_data covers all images, regardless of whether they are a keyframe or not.

+Only keyframes are annotated.

+For every keyframe, we also include up to 6 past and 6 future sweeps at 2 Hz.

+We can navigate between consecutive images using the `prev` and `next` pointers.

+The sample timestamp is inherited from the keyframe camera sample_data timestamp.

+```

+sample_data {

+ "token": <str> -- Unique record identifier.

+ "sample_token": <str> -- Foreign key. Sample to which this sample_data is associated.

+ "ego_pose_token": <str> -- Foreign key.

+ "calibrated_sensor_token": <str> -- Foreign key.

+ "filename": <str> -- Relative path to data-blob on disk.

+ "fileformat": <str> -- Data file format.

+ "width": <int> -- If the sample data is an image, this is the image width in pixels.

+ "height": <int> -- If the sample data is an image, this is the image height in pixels.

+ "timestamp": <int> -- Unix time stamp.

+ "is_key_frame": <bool> -- True if sample_data is part of key_frame, else False.

+ "next": <str> -- Foreign key. Sample data from the same sensor that follows this in time. Empty if end of scene.

+ "prev": <str> -- Foreign key. Sample data from the same sensor that precedes this in time. Empty if start of scene.

+}

+```

+

+sample

+---------

+A sample is an annotated keyframe selected from a large pool of images in a log.

+Every sample has up to 13 camera sample_datas corresponding to it.

+These include the keyframe, which can be accessed via `key_camera_token`.

+```

+sample {

+ "token": <str> -- Unique record identifier.

+ "timestamp": <int> -- Unix time stamp.

+ "log_token": <str> -- Foreign key pointing to the log.

+ "key_camera_token": <str> -- Foreign key of the sample_data corresponding to the camera keyframe.

+}

+```

+

+sensor

+---------

+A specific sensor type.

+```

+sensor {

+ "token": <str> -- Unique record identifier.

+ "channel": <str> -- Sensor channel name.

+ "modality": <str> -- Sensor modality. Always "camera" in nuImages.

+}

+```

+

+surface_ann

+---------

+The annotation of a background object (driveable surface) in an image.

+Each background object is annotated with a 2d semantic segmentation mask.

+```

+surface_ann {

+ "token": <str> -- Unique record identifier.

+ "sample_data_token": <str> -- Foreign key pointing to the sample data, which must be a keyframe image.

+ "category_token": <str> -- Foreign key pointing to the surface category.

+ "mask": <RLE> -- Run length encoding of segmentation mask using the pycocotools package.

+}

+```

diff --git a/docs/schema_nuscenes.md b/docs/schema_nuscenes.md

new file mode 100644

index 0000000..e69415c

--- /dev/null

+++ b/docs/schema_nuscenes.md

@@ -0,0 +1,211 @@

+nuScenes schema

+==========

+This document describes the database schema used in nuScenes.

+All annotations and meta data (including calibration, maps, vehicle coordinates etc.) are covered in a relational database.

+The database tables are listed below.

+Every row can be identified by its unique primary key `token`.

+Foreign keys such as `sample_token` may be used to link to the `token` of the table `sample`.

+Please refer to the [tutorial](https://www.nuscenes.org/nuimages#tutorial) for an introduction to the most important database tables.

+

+

+

+attribute

+---------

+An attribute is a property of an instance that can change while the category remains the same.

+Example: a vehicle being parked/stopped/moving, and whether or not a bicycle has a rider.

+```

+attribute {

+ "token": <str> -- Unique record identifier.

+ "name": <str> -- Attribute name.

+ "description": <str> -- Attribute description.

+}

+```

+

+calibrated_sensor

+---------

+Definition of a particular sensor (lidar/radar/camera) as calibrated on a particular vehicle.

+All extrinsic parameters are given with respect to the ego vehicle body frame.

+All camera images come undistorted and rectified.

+```

+calibrated_sensor {

+ "token": <str> -- Unique record identifier.

+ "sensor_token": <str> -- Foreign key pointing to the sensor type.

+ "translation": <float> [3] -- Coordinate system origin in meters: x, y, z.

+ "rotation": <float> [4] -- Coordinate system orientation as quaternion: w, x, y, z.

+ "camera_intrinsic": <float> [3, 3] -- Intrinsic camera calibration. Empty for sensors that are not cameras.

+}

+```

+

+category

+---------

+Taxonomy of object categories (e.g. vehicle, human).

+Subcategories are delineated by a period (e.g. `human.pedestrian.adult`).

+```

+category {

+ "token": <str> -- Unique record identifier.

+ "name": <str> -- Category name. Subcategories indicated by period.

+ "description": <str> -- Category description.

+ "index": <int> -- The index of the label used for efficiency reasons in the .bin label files of nuScenes-lidarseg. This field did not exist previously.

+}

+```

+

+ego_pose

+---------

+Ego vehicle pose at a particular timestamp. Given with respect to global coordinate system of the log's map.

+The ego_pose is the output of a lidar map-based localization algorithm described in our paper.

+The localization is 2-dimensional in the x-y plane.

+```

+ego_pose {

+ "token": <str> -- Unique record identifier.

+ "translation": <float> [3] -- Coordinate system origin in meters: x, y, z. Note that z is always 0.

+ "rotation": <float> [4] -- Coordinate system orientation as quaternion: w, x, y, z.

+ "timestamp": <int> -- Unix time stamp.

+}

+```

+

+instance

+---------

+An object instance, e.g. particular vehicle.

+This table is an enumeration of all object instances we observed.

+Note that instances are not tracked across scenes.

+```

+instance {

+ "token": <str> -- Unique record identifier.

+ "category_token": <str> -- Foreign key pointing to the object category.

+ "nbr_annotations": <int> -- Number of annotations of this instance.

+ "first_annotation_token": <str> -- Foreign key. Points to the first annotation of this instance.

+ "last_annotation_token": <str> -- Foreign key. Points to the last annotation of this instance.

+}

+```

+

+lidarseg

+---------

+Mapping between nuScenes-lidarseg annotations and sample_datas corresponding to the lidar pointcloud associated with a keyframe.

+```

+lidarseg {

+ "token": <str> -- Unique record identifier.

+ "filename": <str> -- The name of the .bin files containing the nuScenes-lidarseg labels. These are numpy arrays of uint8 stored in binary format using numpy.

+ "sample_data_token": <str> -- Foreign key. Sample_data corresponding to the annotated lidar pointcloud with is_key_frame=True.

+}

+```

+

+log

+---------

+Information about the log from which the data was extracted.

+```

+log {

+ "token": <str> -- Unique record identifier.

+ "logfile": <str> -- Log file name.

+ "vehicle": <str> -- Vehicle name.

+ "date_captured": <str> -- Date (YYYY-MM-DD).

+ "location": <str> -- Area where log was captured, e.g. singapore-onenorth.

+}

+```

+

+map

+---------

+Map data that is stored as binary semantic masks from a top-down view.

+```

+map {

+ "token": <str> -- Unique record identifier.

+ "log_tokens": <str> [n] -- Foreign keys.

+ "category": <str> -- Map category, currently only semantic_prior for drivable surface and sidewalk.

+ "filename": <str> -- Relative path to the file with the map mask.

+}

+```

+

+sample

+---------

+A sample is an annotated keyframe at 2 Hz.

+The data is collected at (approximately) the same timestamp as part of a single LIDAR sweep.

+```

+sample {

+ "token": <str> -- Unique record identifier.

+ "timestamp": <int> -- Unix time stamp.

+ "scene_token": <str> -- Foreign key pointing to the scene.

+ "next": <str> -- Foreign key. Sample that follows this in time. Empty if end of scene.

+ "prev": <str> -- Foreign key. Sample that precedes this in time. Empty if start of scene.

+}

+```

+

+sample_annotation

+---------

+A bounding box defining the position of an object seen in a sample.

+All location data is given with respect to the global coordinate system.

+```

+sample_annotation {